Meta’s platforms showed hundreds of “nudify” deepfake ads, CBS News investigation finds

Meta has removed a number of ads promoting “nudify” apps, AI tools used to create sexually explicit deepfakes from images of real people, after a CBS News investigation found hundreds of such advertisements on its platforms.

“We have strict rules against non-consensual intimate imagery; we removed these ads, deleted the Pages responsible for running them and permanently blocked the URLs associated with these apps,” a Meta spokesperson told CBS News in an emailed statement.

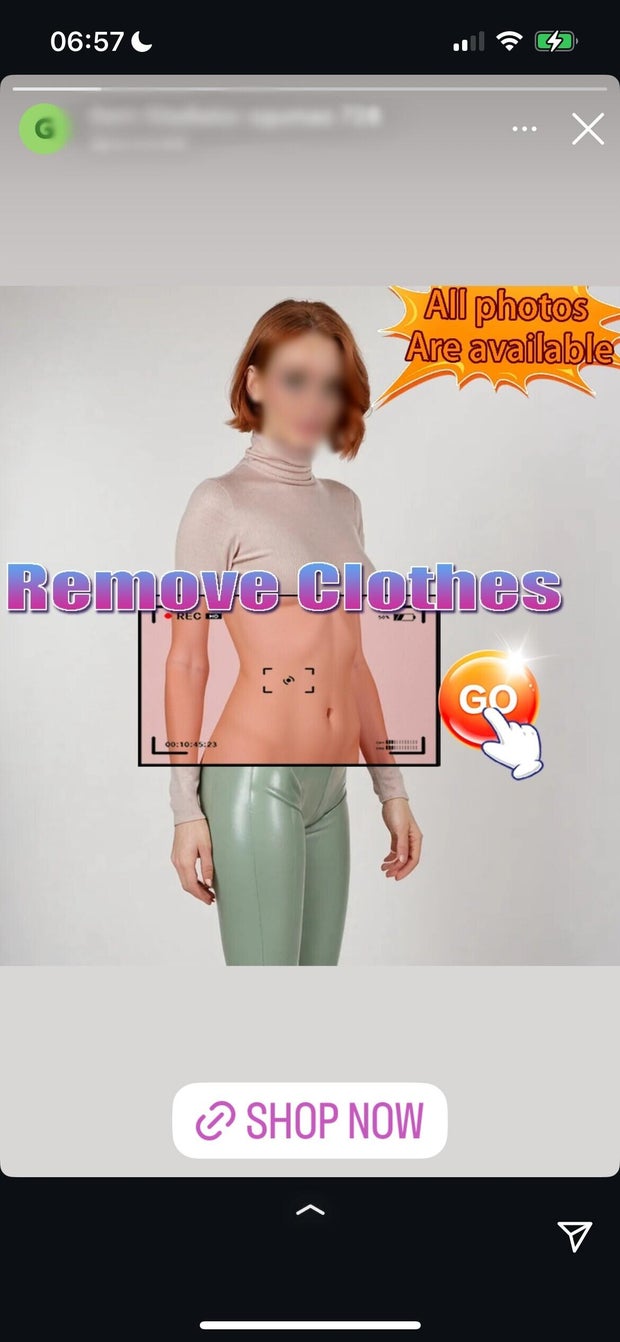

CBS News uncovered dozens of those ads on Meta’s Instagram platform, in its “Stories” feature, promoting AI tools that, in many cases, advertised the ability to “upload a photo” and “see anyone naked.” Other ads in Instagram’s Stories promoted the ability to upload and manipulate videos of real people. One promotional ad even read “how is this filter even allowed?” as text underneath an example of a nude deepfake.

One ad promoted its AI product by using highly sexualized, underwear-clad deepfake images of actors Scarlett Johansson and Anne Hathaway. Some of the ads’ URL links redirect to websites that promote the ability to animate real people’s images and make them perform sex acts. Some of the applications charged users between $20 and $80 to access these “exclusive” and “advance” features. In other cases, an ad’s URL redirected users to Apple’s app store, where “nudify” apps were available to download.

An analysis of the advertisements in Meta’s Ad Library found that there were, at a minimum, hundreds of these ads available across the company’s social media platforms, including Facebook, Instagram, Threads, the Facebook Messenger application and Meta Audience Network, a platform that allows Meta advertisers to reach users on mobile apps and websites that partner with the company.

According to Meta’s own Ad Library data, many of these ads were specifically targeted at men between the ages of 18 and 65, and were active in the United States, the European Union and the United Kingdom.

A Meta spokesperson told CBS News that the spread of this kind of AI-generated content is an ongoing problem and that the company faces increasingly sophisticated challenges in trying to combat it.

“The people behind these exploitative apps constantly evolve their tactics to evade detection, so we’re continuously working to strengthen our enforcement,” a Meta spokesperson said.

CBS News found that ads for “nudify” deepfake tools were still available on the company’s Instagram platform even after Meta had removed those initially flagged.

Deepfakes are manipulated images, audio recordings, or videos of real people that have been altered with artificial intelligence to misrepresent someone as saying or doing something that the person did not actually say or do.

Last month, President Trump signed into law the bipartisan “Take It Down Act,” which, among other things, requires websites and social media companies to remove deepfake content within 48 hours of notice from a victim.

Although the law makes it illegal to “knowingly publish” or threaten to publish intimate images without a person’s consent, including AI-created deepfakes, it does not target the tools used to create such AI-generated content.

Those tools do, however, violate the safety and moderation rules that Apple and Meta each enforce on their respective platforms.

Meta’s advertising standards policy says, “ads must not contain adult nudity and sexual activity. This includes nudity, depictions of people in explicit or sexually suggestive positions, or activities that are sexually suggestive.”

Under Meta’s “bullying and harassment” policy, the company also prohibits “derogatory sexualized photoshop or drawings” on its platforms. The company says its rules are intended to block users from sharing or threatening to share nonconsensual intimate imagery.

Apple’s guidelines for its app store explicitly state that “content that is offensive, insensitive, upsetting, intended to disgust, in exceptionally poor taste, or just plain creepy” is banned.

Alexios Mantzarlis, director of the Security, Trust, and Safety Initiative at Cornell University’s tech research center, has been studying the surge in AI deepfake networks marketing on social platforms for more than a year. He told CBS News in a phone interview on Tuesday that over that period he had seen thousands more of these ads across Meta platforms, as well as on platforms such as X and Telegram.

While Telegram and X have what he described as a structural “lawlessness” that allows for this kind of content, he believes Meta’s leadership lacks the will to address the issue, despite having content moderators in place.

“I do think that trust and safety teams at these companies care. I don’t think, frankly, that they care at the very top of the company in Meta’s case,” he said. “They’re clearly under-resourcing the teams that have to fight this stuff, because as sophisticated as these [deepfake] networks are … they don’t have Meta money to throw at it.”

Mantzarlis also said his research found that “nudify” deepfake generators are available to download on both Apple’s App Store and Google’s Play Store, and he expressed frustration with these major platforms’ inability to enforce their rules against such content.

“The problem with apps is that they have this dual-use front where they present on the app store as a fun way to face swap, but then they are marketing on Meta as their primary purpose being nudification. So when these apps come up for review on the Apple or Google store, they don’t necessarily have the wherewithal to ban them,” he said.

“There needs to be cross-industry cooperation where if the app or the website markets itself as a tool for nudification anywhere on the web, then everyone else can be like, ‘All right, I don’t care what you present yourself as on my platform, you’re gone,’” Mantzarlis added.

CBS News has reached out to both Apple and Google for comment on how they moderate their respective platforms. Neither company had responded by the time of writing.

Major tech companies’ promotion of such apps raises serious questions about both user consent and online safety for minors. A CBS News analysis of one “nudify” website promoted on Instagram showed that the site did not prompt any form of age verification before a user uploaded a photo to generate a deepfake image.

Such issues are widespread. In December, CBS News’ 60 Minutes reported on the lack of age verification on one of the most popular sites using artificial intelligence to generate fake nude photos of real people.

Although visitors were told that they must be 18 or older to use the site, and that “processing of minors is impossible,” 60 Minutes was able to start uploading photos immediately after clicking “accept” on the age warning prompt, with no further age verification required.

Data also shows that a high percentage of underage teenagers have interacted with deepfake content. A March 2025 study conducted by the child protection nonprofit Thorn found that among teens, 41% said they had heard of the term “deepfake nudes,” while 10% reported personally knowing someone who had had deepfake nude imagery created of them.