The people who think AI might become conscious

BBC

I step into the booth with some trepidation. I’m about to be subjected to strobe lighting while music plays, as part of a research project trying to understand what makes us truly human.

It’s an experience that brings to mind the test in the science fiction film Blade Runner, designed to distinguish humans from artificially created beings posing as humans.

Could I be a robot from the future and not know it? Would I pass the test?

The researchers assure me that this isn’t actually what this experiment is about. The device that they call the “Dreamachine” is designed to study how the human brain generates our conscious experiences of the world.

As the strobing begins, and even though my eyes are closed, I see swirling two-dimensional geometric patterns. It’s like jumping into a kaleidoscope, with constantly shifting triangles, pentagons and octagons. The colours are vivid, intense and ever-changing: pinks, magentas and turquoise hues, glowing like neon lights.

The “Dreamachine” brings the brain’s inner activity to the surface with flashing lights, aiming to explore how our thought processes work.

The images I’m seeing are unique to my own inner world and unique to myself, according to the researchers. They believe these patterns can shed light on consciousness itself.

They hear me whisper: “It’s lovely, absolutely lovely. It’s like flying through my own mind!”

The “Dreamachine”, at Sussex University’s Centre for Consciousness Science, is just one of many new research projects across the world investigating human consciousness: the part of our minds that enables us to be self-aware, to think and feel and make independent decisions about the world.

By learning the nature of consciousness, researchers hope to better understand what’s happening within the silicon brains of artificial intelligence. Some believe that AI systems will soon become independently conscious, if they haven’t already.

But what really is consciousness, and how close is AI to gaining it? And could the belief that AI might be conscious itself fundamentally change humans in the next few decades?

From science fiction to reality

The idea of machines with their own minds has long been explored in science fiction. Worries about AI stretch back nearly 100 years to the film Metropolis, in which a robot impersonates a real woman.

A fear of machines becoming conscious and posing a threat to humans was explored in the 1968 film 2001: A Space Odyssey, when the HAL 9000 computer tried to kill astronauts onboard its spaceship. And in the final Mission: Impossible film, which has just been released, the world is threatened by a powerful rogue AI, described by one character as a “self-aware, self-learning, truth-eating digital parasite”.

LMPC via Getty Images

But quite recently, in the real world there has been a rapid tipping point in thinking on machine consciousness, where credible voices have become concerned that this is no longer the stuff of science fiction.

The sudden shift has been prompted by the success of so-called large language models (LLMs), which can be accessed through apps on our phones such as Gemini and ChatGPT. The ability of the latest generation of LLMs to have plausible, free-flowing conversations has surprised even their designers and some of the leading experts in the field.

There is a growing view among some thinkers that as AI becomes even more intelligent, the lights will suddenly switch on inside the machines and they will become conscious.

Others, such as Prof Anil Seth, who leads the Sussex University team, disagree, describing the view as “blindly optimistic and driven by human exceptionalism”.

“We associate consciousness with intelligence and language because they go together in humans. But just because they go together in us, it doesn’t mean they go together in general, for example in animals.”

So what actually is consciousness?

The short answer is that no-one knows. That’s clear from the good-natured but robust arguments among Prof Seth’s own team of young AI specialists, computing experts, neuroscientists and philosophers, who are trying to answer one of the biggest questions in science and philosophy.

While there are many differing views at the consciousness research centre, the scientists are unified in their method: to break this big problem down into lots of smaller ones in a series of research projects, which includes the Dreamachine.

Just as the search to find the “spark of life” that made inanimate objects come alive was abandoned in the 19th Century in favour of identifying how individual parts of living systems worked, the Sussex team is now adopting the same approach to consciousness.

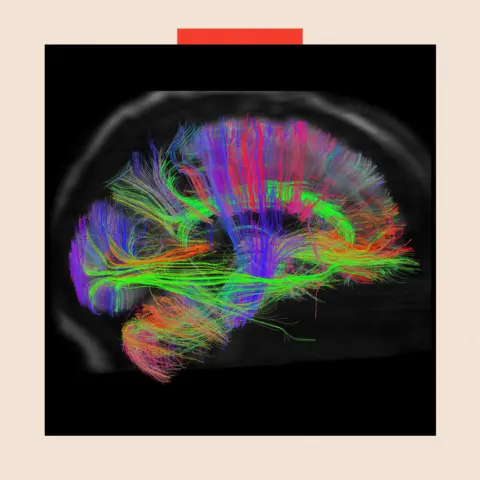

They hope to identify patterns of brain activity that explain various properties of conscious experiences, such as changes in electrical signals or blood flow to different regions. The goal is to go beyond looking for mere correlations between brain activity and consciousness, and to try to come up with explanations for its individual components.

Prof Seth, the author of a book on consciousness, Being You, worries that we may be rushing headlong into a society that is being rapidly reshaped by the sheer pace of technological change without sufficient knowledge about the science, or thought about the consequences.

“We take it as if the future has already been written; that there is an inevitable march to a superhuman replacement,” he says.

“We didn’t have these conversations enough with the rise of social media, much to our collective detriment. But with AI, it isn’t too late. We can decide what we want.”

Is AI consciousness already here?

But there are some in the tech sector who believe that the AI in our computers and phones may already be conscious, and that we should treat it as such.

Google suspended software engineer Blake Lemoine in 2022, after he argued that artificial intelligence chatbots could feel things and potentially suffer.

In November 2024, an AI welfare officer for Anthropic, Kyle Fish, co-authored a report suggesting that AI consciousness was a realistic possibility in the near future. He recently told The New York Times that he also believed there was a small (15%) chance that chatbots are already conscious.

One reason he thinks it possible is that no-one, not even the people who developed these systems, knows exactly how they work. That is worrying, says Prof Murray Shanahan, principal scientist at Google DeepMind and emeritus professor in AI at Imperial College, London.

“We don’t actually understand very well the way in which LLMs work internally, and that is some cause for concern,” he tells the BBC.

According to Prof Shanahan, it’s important for tech firms to get a proper understanding of the systems they’re building, and researchers are looking into that as a matter of urgency.

“We are in a strange position of building these extremely complex things, where we don’t have a good theory of exactly how they achieve the remarkable things they are achieving,” he says. “So having a better understanding of how they work will enable us to steer them in the direction we want and to ensure that they are safe.”

‘The next stage in humanity’s evolution’

The prevailing view in the tech sector is that LLMs are not currently conscious in the way we experience the world, and probably not in any way at all. But that is something that the married couple Profs Lenore and Manuel Blum, both emeritus professors at Carnegie Mellon University in Pittsburgh, Pennsylvania, believe will change, possibly quite soon.

According to the Blums, that could happen as AI and LLMs gain more live sensory inputs from the real world, such as vision and touch, by connecting cameras and haptic sensors (related to touch) to AI systems. They are developing a computer model that constructs its own internal language called Brainish to enable this additional sensory data to be processed, attempting to replicate the processes that go on in the brain.

Getty Images

“We think Brainish can solve the problem of consciousness as we know it,” Lenore tells the BBC. “AI consciousness is inevitable.”

Manuel chips in enthusiastically with an impish grin, saying that the new systems that he too firmly believes will emerge will be the “next stage in humanity’s evolution”.

Conscious robots, he believes, “are our progeny. Down the road, machines like these will be entities that will be on Earth and maybe on other planets when we are no longer around”.

David Chalmers, Professor of Philosophy and Neural Science at New York University, defined the distinction between real and apparent consciousness at a conference in Tucson, Arizona in 1994. He laid out the “hard problem” of working out how and why any of the complex operations of brains give rise to conscious experience, such as our emotional response when we hear a nightingale sing.

Prof Chalmers says that he is open to the possibility of the hard problem being solved.

“The ideal outcome would be one where humanity shares in this new intelligence bonanza,” he tells the BBC. “Maybe our brains are augmented by AI systems.”

On the sci-fi implications of that, he wryly observes: “In my profession, there is a fine line between science fiction and philosophy”.

‘Meat-based computers’

Prof Seth, however, is exploring the idea that true consciousness can only be realised by living systems.

“A strong case can be made that it isn’t computation that is sufficient for consciousness but being alive,” he says.

“In brains, unlike computers, it’s hard to separate what they do from what they are.” Without this separation, he argues, it is difficult to believe that brains “are simply meat-based computers”.

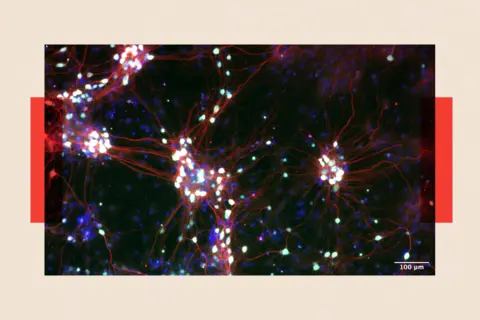

And if Prof Seth’s intuition about life being important is on the right track, the most likely technology will not be made of silicon running computer code, but will instead consist of tiny collections of nerve cells the size of lentil grains that are currently being grown in labs.

Called “mini-brains” in media reports, they are referred to as “cerebral organoids” by the scientific community, which uses them to research how the brain works, and for drug testing.

One Australian firm, Cortical Labs, in Melbourne, has even developed a system of nerve cells in a dish that can play the 1972 sports video game Pong. Although it is a far cry from a conscious system, the so-called “brain in a dish” is spooky as it moves a paddle up and down a screen to bat back a pixelated ball.

Some experts feel that if consciousness is to emerge, it is most likely to be from larger, more advanced versions of these living tissue systems.

Cortical Labs monitors their electrical activity for any signals that could conceivably be anything like the emergence of consciousness.

The firm’s chief scientific and operating officer, Dr Brett Kagan, is mindful that any emerging uncontrollable intelligence might have priorities that “are not aligned with ours”. In that case, he says half-jokingly, potential organoid overlords would be easier to defeat because “there is always bleach” to pour over the fragile neurons.

Returning to a more solemn tone, he says the small but significant threat of artificial consciousness is something he would like the big players in the field to focus on more as part of serious attempts to advance our scientific understanding, but says that “unfortunately, we don’t see any earnest efforts in this space”.

The illusion of consciousness

The more immediate problem, though, could be how the illusion of machines being conscious affects us.

In just a few years, we may well be living in a world populated by humanoid robots and deepfakes that seem conscious, according to Prof Seth. He worries that we won’t be able to resist believing that the AI has feelings and empathy, which could lead to new dangers.

“It will mean that we trust these things more, share more data with them and be more open to persuasion.”

But the greater risk from the illusion of consciousness is a “moral corrosion”, he says.

“It will distort our moral priorities by making us devote more of our resources to caring for these systems at the expense of the real things in our lives,” meaning that we might have compassion for robots, but care less for other humans.

And that could fundamentally alter us, according to Prof Shanahan.

“Increasingly, human relationships are going to be replicated in AI relationships; they will be used as teachers, friends, adversaries in computer games and even romantic partners. Whether that is a good or bad thing, I don’t know, but it is going to happen, and we are not going to be able to prevent it.”

Top picture credit: Getty Images

BBC InDepth is the home on the website and app for the best analysis, with fresh perspectives that challenge assumptions and deep reporting on the biggest issues of the day. And we showcase thought-provoking content from across BBC Sounds and iPlayer too.
